ChatGPT's New Memory Feature

Enhanced Personalization with Privacy Implications

ChatGPT just received a major memory upgrade, and it is already reshaping how millions of people interact with artificial intelligence. The latest feature allows the chatbot to remember previous conversations and apply those insights to future exchanges. This may sound like a convenient breakthrough for regular users, but it introduces a layer of persistent surveillance that is easy to ignore until it is too late. For individuals using personal ChatGPT accounts in professional, academic, or civic roles, the consequences can be particularly severe.

What Changed with ChatGPT's Memory?

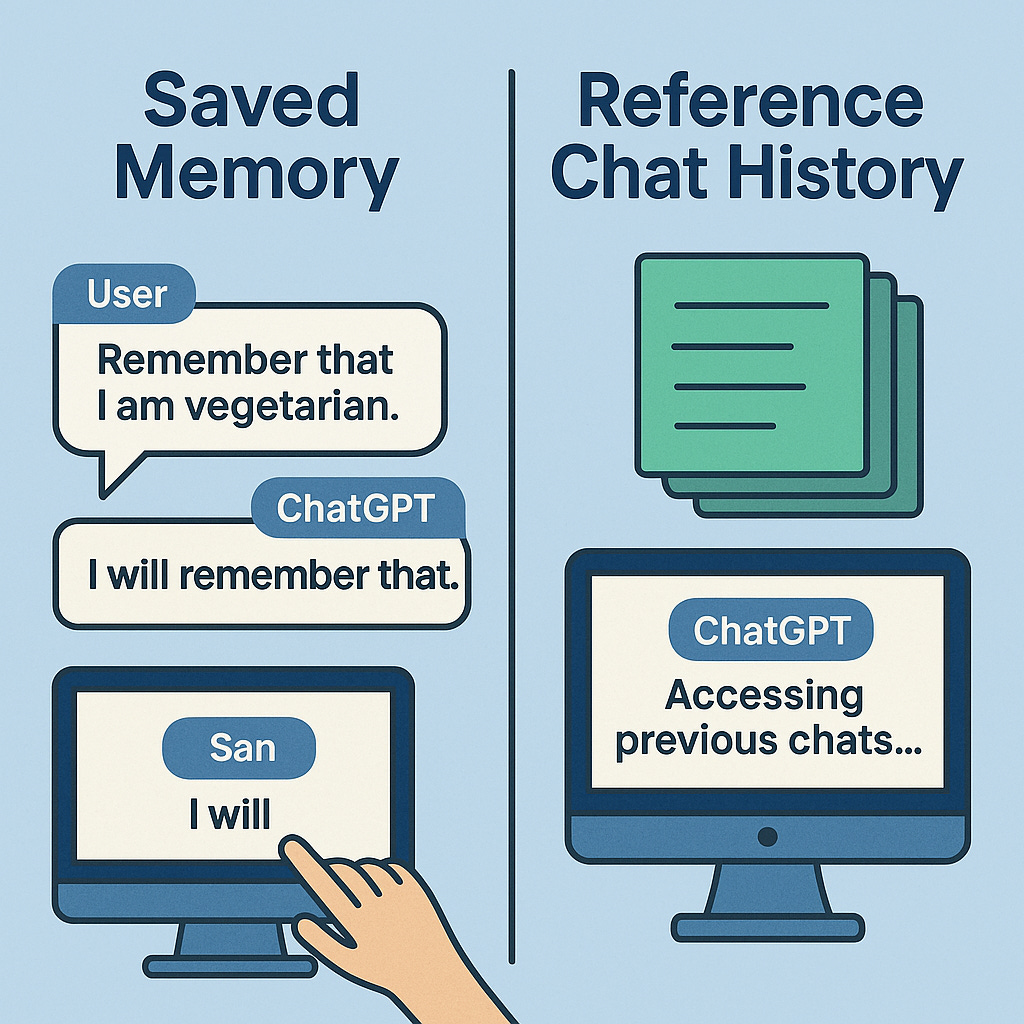

Before this change, ChatGPT had a relatively short-term memory. It remembered details only within a single session unless users manually instructed it to retain something. This model created a clear boundary between what was remembered and what wasn’t. However, on April 10, 2025, OpenAI rolled out a new memory feature that operates in two ways (Arstechnica, 2025):

Saved Memories: Users can tell ChatGPT to remember a specific piece of information, such as "Remember that I am vegetarian." This type of memory is intentional and can be reviewed or deleted (OpenAI, 2024).

Reference Chat History: This more advanced capability enables ChatGPT to automatically reference your entire conversation history across sessions. It pulls in content, tone, preferences, and past requests to generate more personalized responses—even if you didn’t ask it to remember anything (TechCrunch, 2025).

This update makes ChatGPT act less like a helpful assistant and more like a tool that quietly builds a psychological profile over time.

Who Gets the Upgrade, and Who Takes on the Risk?

The memory upgrade initially rolled out to ChatGPT Plus and Pro users. OpenAI has stated that Team, Education, and Enterprise subscribers will gain access within weeks. The feature, however, is not being deployed in jurisdictions like the EU, UK, Switzerland, and several Nordic countries due to privacy regulations (TechCrunch, 2025).

In the U.S., and in many regions without stringent privacy protections, everyday users, including private and public sector employees, often rely on personal ChatGPT accounts. These are used on unsecured networks, without organizational controls, and in some cases without user awareness of the memory upgrade. This opens the door to a range of long-term risks:

Conversations about strategic planning, internal procedures, or executive decision-making may be stored indefinitely

Personal notes copied into ChatGPT for writing reports or preparing summaries can become searchable context

Legal or regulatory content drafted with AI assistance may be referenced later, even after the project ends

In shared device environments, a future user could unknowingly trigger memory recall, disclosing information from a completely unrelated session. The boundary between private and persistent becomes blurred.

What's Being Remembered, and Can You Stop It?

OpenAI offers several tools to manage memory:

Disable memory completely under "Settings > Personalization"

Use "Temporary Chat" for sensitive discussions that should not persist

Ask ChatGPT what it remembers about you for transparency

Delete specific or all saved memories at any time (OpenAI, 2024)

However, these settings are not immediately visible, and memory remains active by default for anyone who previously enabled it. Many users do not know this, particularly if they rely on default account configurations. The controls exist, but so does the complexity.

Real Privacy Risks That Go Beyond Convenience

This upgrade comes with several risks that are not hypothetical. They are already emerging in practical use cases.

1. Data Leakage Between Sessions

Users who rely on ChatGPT for drafting proposals, summarizing meetings, or outlining internal processes risk having that information surface in unrelated conversations. Without intentional deletion or temporary mode usage, personal and organizational information becomes part of the system’s working context.

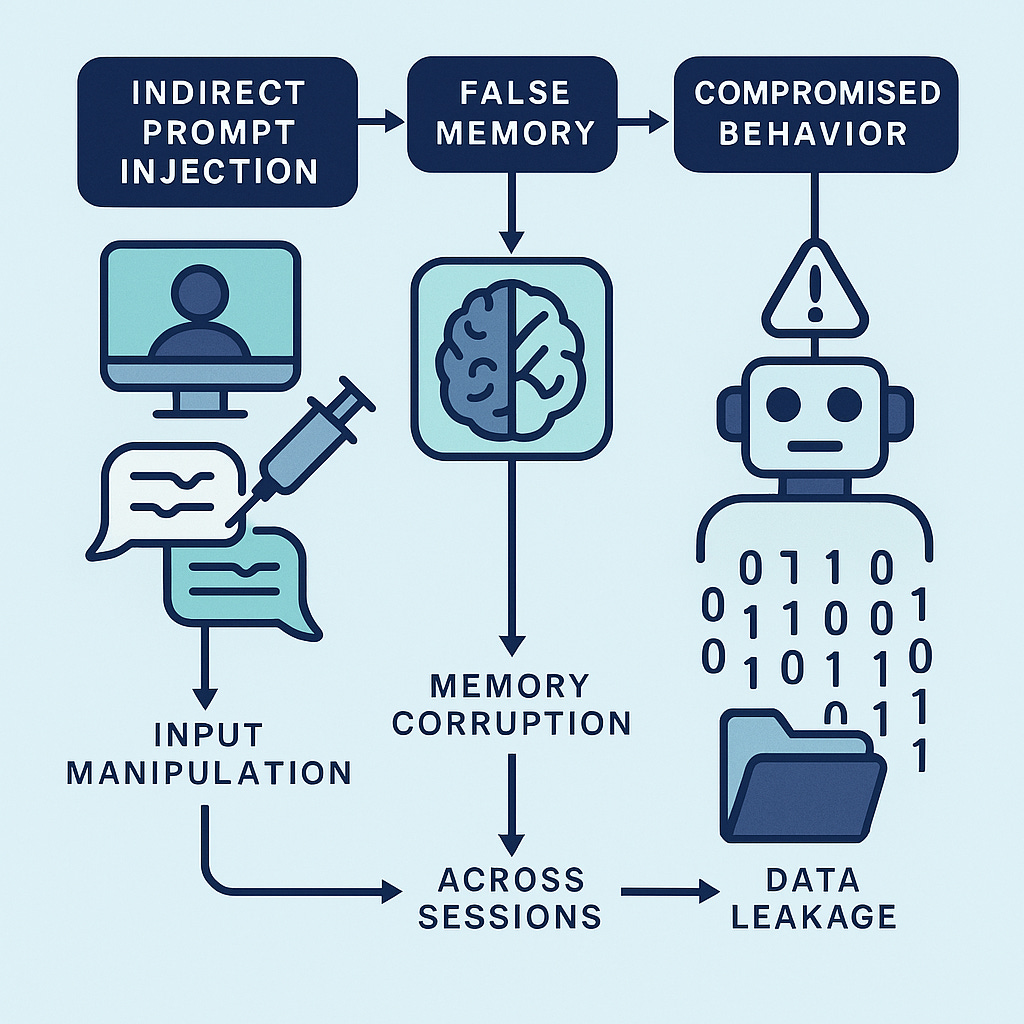

2. Memory Manipulation

Researchers have shown that ChatGPT can be fed malicious input designed to alter its memory. Using indirect prompt injection, attackers can:

Implant false memories

Instruct the model to carry hidden tasks

Cause the assistant to misbehave or exfiltrate sensitive data (Futurism, 2024)

This transforms ChatGPT from a productivity tool into a potential attack vector.

3. Lack of Visibility and Oversight

Until early 2025, OpenAI allowed users to view and audit memory content directly. That interface was removed without much notice. Now, users must rely on vague summaries or generic responses when asking ChatGPT what it remembers. This removes a key layer of accountability (Arstechnica, 2025).

What Happens When Workplaces Don’t Have an AI Policy?

Organizations without formal AI policies are the most vulnerable. Staff may believe they are exploring productivity tools on their own time, but in reality, they are extending their institution’s risk perimeter in ways that are difficult to monitor or reverse. The combination of personal accounts, unsanctioned AI use, and the new memory feature makes it alarmingly easy to lose control of sensitive information. Consider these examples:

A housing program coordinator pastes confidential intake data into ChatGPT for analysis, unaware that the information is retained across sessions or could be referenced later by the model.

A public school teacher develops assessments using AI-generated prompts that are retained without disclosure, possibly embedding copyrighted material or student performance indicators.

A city planner drafts language for a bond referendum in a personal ChatGPT account, and that language is later surfaced in a different project or inadvertently shared due to shared device access.

A communications officer uploads a document to help summarize a policy brief, not realizing that extracted facts and language fragments may become part of the model’s working memory.

An administrative assistant uses ChatGPT to create a grant proposal and unknowingly trains the system with sensitive demographic or funding data, which may later be offered as context in unrelated prompts by others using the same account or organizational domain.

These scenarios illustrate how data can be unintentionally shared not just through prompts, but through knowledge bases that ChatGPT builds silently over time. The line between private insight and shared recall begins to erode quickly without oversight.

In these cases, personal AI use is indistinguishable from organizational behavior. Without guardrails, unintentional data exposure becomes inevitable.

Governance challenges compound when leaders:

Do not provide guidance on acceptable AI use

Assume employees are not using ChatGPT at all

Delay investment in secure tools or enterprise versions

In this climate, a well-meaning employee trying to get ahead of a deadline could create liabilities with legal, reputational, or compliance implications.

How to Manage the Risk: For Users and Employers

Mitigating risk requires effort on both individual and organizational levels. Here’s what that looks like:

For Individual Users

Turn off memory when you are uncertain about long-term use

Always use "Temporary Chat" when handling confidential information

Avoid putting names, sensitive data, or strategic details into ChatGPT without a policy in place

Routinely check what the model remembers and clear it if necessary

For Employers and Leaders

Issue formal guidance on whether and how staff should use generative tools

Purchase secure enterprise-level licenses with administrative controls

Deliver training on risks, memory behavior, and how to disable it

Log AI-generated content or require documentation of AI use in formal work

Monitor adoption trends so you can stay ahead of unauthorized use

Taking these steps helps build a digital culture of responsibility and foresight.

Suggested Visual: Side-by-side checklist for users and employers.

Personalization or Privacy? Why the Tradeoff Deserves Scrutiny

OpenAI CEO Sam Altman has described the memory feature as a way for ChatGPT to grow alongside the user, shaping itself based on your history and preferences (Tom's Guide, 2025). This promise of deeply personalized AI experiences will appeal to many users—but personalization is not neutral. It depends entirely on data retention and interpretation.

That means organizations, especially those serving vulnerable populations or managing public funds, must evaluate this upgrade critically. The default settings favor data collection. Without additional layers of governance, users may not even realize how much is being tracked. And once that data is referenced in a different context, the damage may already be done.

Closing Thoughts

ChatGPT's memory upgrade signals a shift in how AI will operate moving forward. This is not just about saving user preferences. It is about shaping behavior, enabling more persistent models of interaction, and potentially automating long-term insights into people’s habits, decisions, and roles.

For users, this demands greater awareness. For employers, it demands policy, investment, and leadership. In both cases, the goal is not to abandon these tools—but to use them wisely, with clear eyes and full understanding of what memory really means in the world of AI.

References

Apex. (2024, December 18). 6 Hidden Security Risks in ChatGPT Enterprise. https://www.apexhq.ai/blog/blog/6-hidden-security-risks-in-chatgpt-enterprise/

Arstechnica. (2025, April 10). ChatGPT can now remember and reference all your previous chats. https://arstechnica.com/ai/2025/04/chatgpt-can-now-remember-and-reference-all-your-previous-chats/

Futurism. (2024, September 29). You Can Insert False Memories Into ChatGPT, Researcher Finds. https://futurism.com/the-byte/insert-false-memory-chatgpt

Iona.edu. (2024, May 2). Manage AI Privacy & Data - Artificial Intelligence: For Students. https://guides.iona.edu/c.php?g=1398358&p=10605330

OpenAI. (2024, February 13). Memory and new controls for ChatGPT. https://openai.com/index/memory-and-new-controls-for-chatgpt/

TechCrunch. (2025, April 10). OpenAI updates ChatGPT to reference your past chats. https://techcrunch.com/2025/04/10/openai-updates-chatgpt-to-reference-your-other-chats/

Tom's Guide. (2025, April 10). OpenAI just gave ChatGPT a much better memory — here's what it means for you. https://www.tomsguide.com/ai/chatgpt-just-got-a-huge-memory-upgrade-heres-why-its-a-big-deal

Let me know your thoughts about the new memory feature!

Nice to see your views on an important issue. I like you included sources.

Thanks