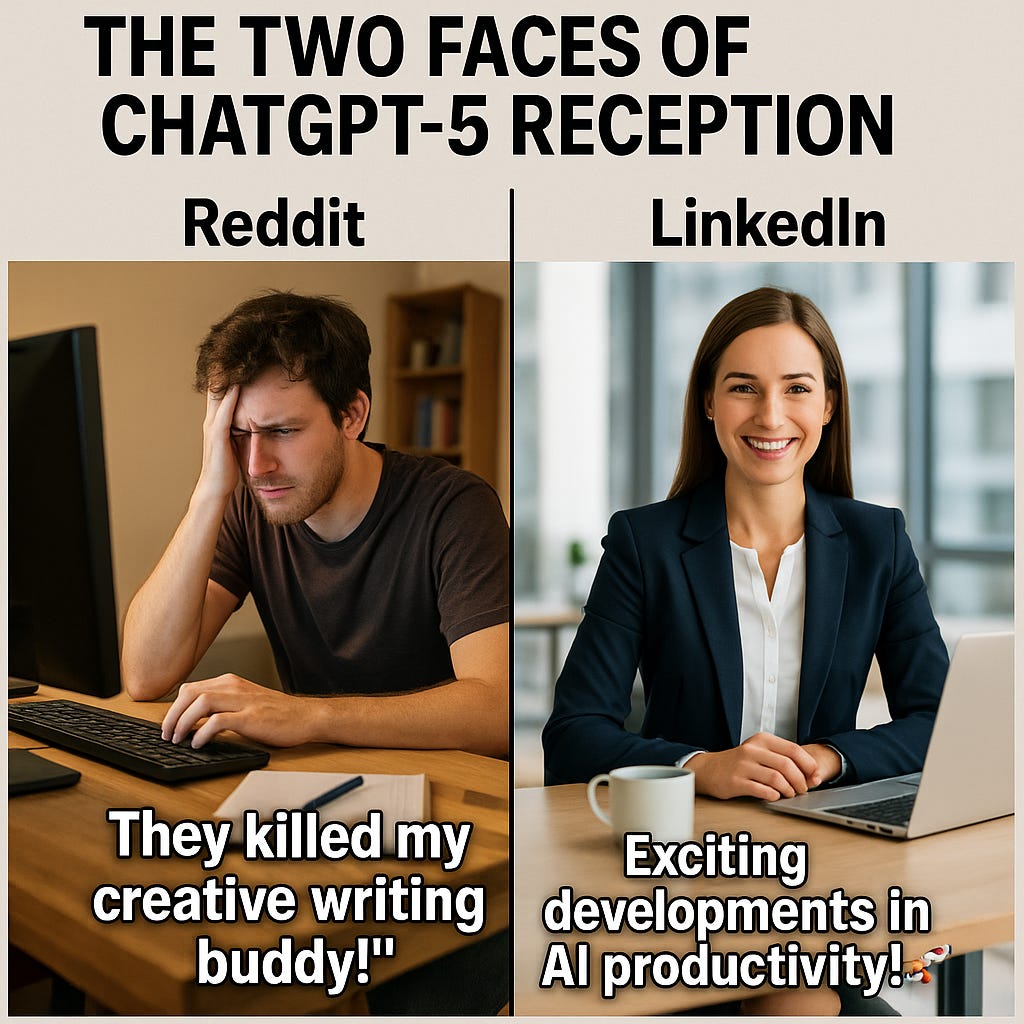

When OpenAI released GPT-5, the company likely expected a predictable mix of enthusiasm and skepticism, the same pattern that has followed every major AI release. What it may not have anticipated was how sharply the reaction would split depending on where you looked. On Reddit, users described it as a step backward, claiming it had lost the spark that made earlier versions engaging. Over on LinkedIn, professionals were praising its improved accuracy, polished tone, and productivity-focused enhancements. The model itself was the same on both platforms, yet the reception could not have been more different. This is not just a story about software upgrades. It is a case study in how community culture shapes the way we judge technology.

The Reddit Rebellion

Reddit’s response was swift and blunt. Popular threads with titles like “GPT-5 is horrible now” gathered thousands of upvotes, with users describing the changes in personal terms rather than purely technical ones. Comments such as “They killed my creative writing buddy” summed up the feeling that something deeper than accuracy had been lost. For these users, ChatGPT was not simply a tool. It had been a creative partner, capable of wandering into unexpected territory, throwing in humor, and exploring tangents that made conversations feel alive.

The new version is more precise, delivers cleaner facts, and stays on track. For business users, this is an improvement. For Reddit’s creative crowd, the loss of unpredictability feels like losing a friend. One comment called it “the epitome of perfection, but perfection feels sterile,” capturing the discomfort of a tool that had become more polished but less personal. This reaction fits Reddit’s culture, which rewards authenticity and embraces quirks as part of genuine interaction.

The LinkedIn Love Affair

LinkedIn’s reception tells a very different story. Here, business leaders, consultants, and AI trainers highlighted how much more reliable GPT-5 had become. The reduced error rate and more structured responses were seen as major gains. Words like “efficiency,” “consistency,” and “reliability” appeared repeatedly in posts. For professionals preparing proposals, producing research summaries, or delivering reports to clients, those qualities are not optional. They are essential.

This reaction aligns with the platform’s culture. LinkedIn favors polished, solution-oriented content. Posts that succeed here tend to be constructive and forward-looking, and even criticism is framed as an opportunity for improvement. In this environment, GPT-5’s cooler, more businesslike tone is not a drawback. It is a sign that the tool is ready for professional use.

Why the Divide Exists

The contrast between the two platforms comes down to four differences: audience, values, use cases, and tolerance for error. Reddit draws a mix of hobbyists, students, writers, and developers who often use AI for creative or casual interaction. LinkedIn is populated by executives, entrepreneurs, and industry specialists who need reliable business outputs. On Reddit, personality and originality are highly valued. On LinkedIn, credibility and professionalism take priority.

The way each group uses AI reflects these priorities. Reddit users might ask for fictional role-plays, unusual brainstorming, or speculative scenarios. LinkedIn users are more likely to request market analysis, email drafting, or formal writing. The tolerance for mistakes is also different. Reddit’s audience will often forgive inaccuracies if the result is engaging. Professional users generally cannot take that risk.

The Influencer Factor

There is another dimension to consider. Many LinkedIn influencers have a professional incentive to be optimistic about new AI releases. Positioning themselves as early adopters and innovators benefits their reputation. This does not make their feedback dishonest, but it does shape the tone and focus of their reviews. On Reddit, where anonymity is common and influence is measured in upvotes rather than contracts or partnerships, there is little penalty for blunt criticism and sometimes a reward for it.

Lessons for Public Sector Leaders

For leaders in government or nonprofit organizations, the GPT-5 divide offers more than an interesting contrast. It is a reminder that cultural fit matters as much as technical capability when adopting AI. Teams that value flexibility and human-like interaction may find a highly structured AI stifling. Teams that prioritize accuracy and compliance may prefer it.

Before choosing an AI tool, it helps to define what success looks like. For some, success means creative problem-solving and an engaging tone. For others, it means consistent outputs that require minimal fact-checking. Setting expectations is also critical. What feels like a significant improvement for one group could feel like a downgrade for another. In some cases, the best approach is to use different models or configurations for different teams, allowing creative groups to work with more personality-driven tools and compliance teams to use stricter, accuracy-focused systems.

Beyond GPT-5

This will not be the last time we see a split like this. Every major AI update will please some users and frustrate others. There is no single “best” version for everyone. The most effective choice will always be the one that fits the needs and culture of the people using it. For public sector leaders, this might mean creating an AI ecosystem rather than relying on a single platform.

My Perspective

Both perspectives are valid. Reddit’s creative users are right to feel the loss of spontaneity, and LinkedIn’s professionals are right to value greater precision. The best path forward would let users choose between a creative mode and a professional mode. Until that option exists, organizations can bridge the gap by offering multiple tools and training people to get the most from each.

The Takeaway

The conversation about GPT-5 is not just about algorithms or features. It is about people, expectations, and the culture of the communities where those conversations take place. If AI adoption is going to succeed, the technology must fit the people who use it. That requires listening, adapting, and tailoring solutions so they feel right for each context. The most advanced tool in the world will only succeed if it works in harmony with the people who rely on it.